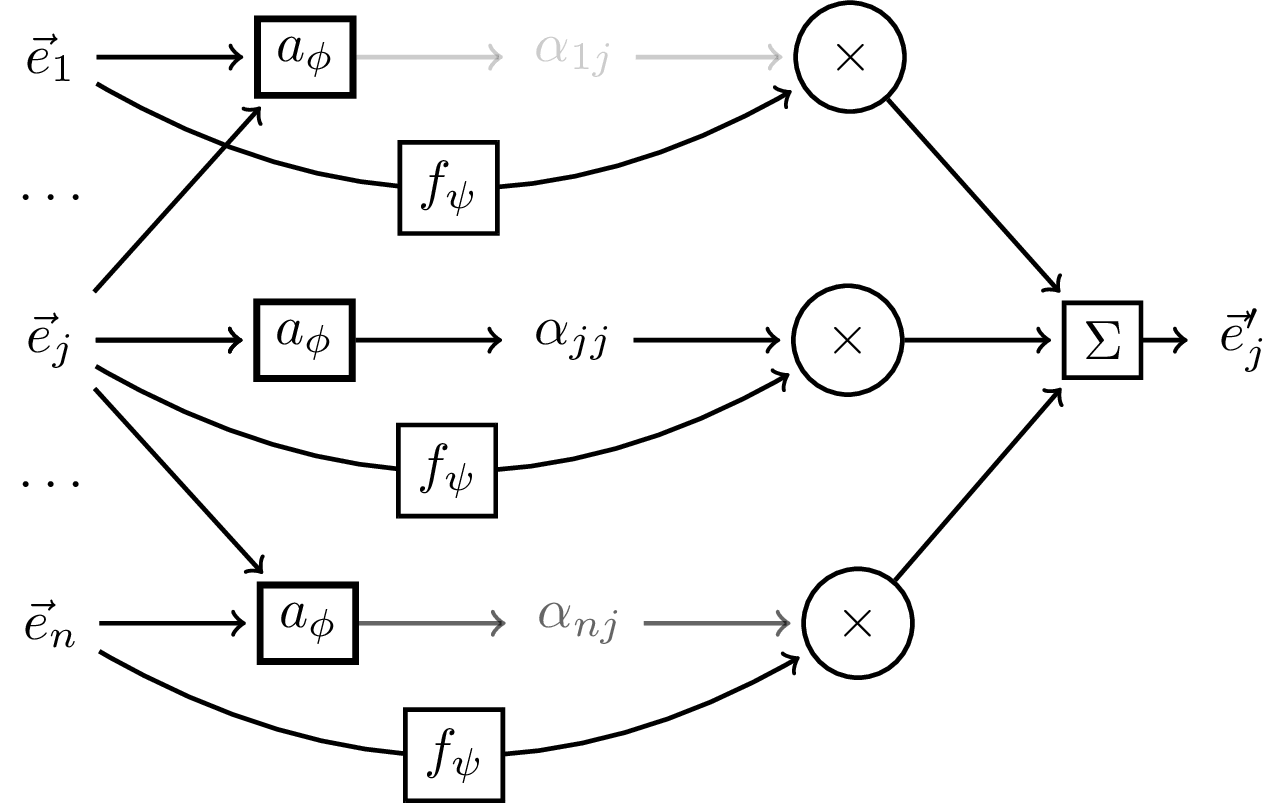

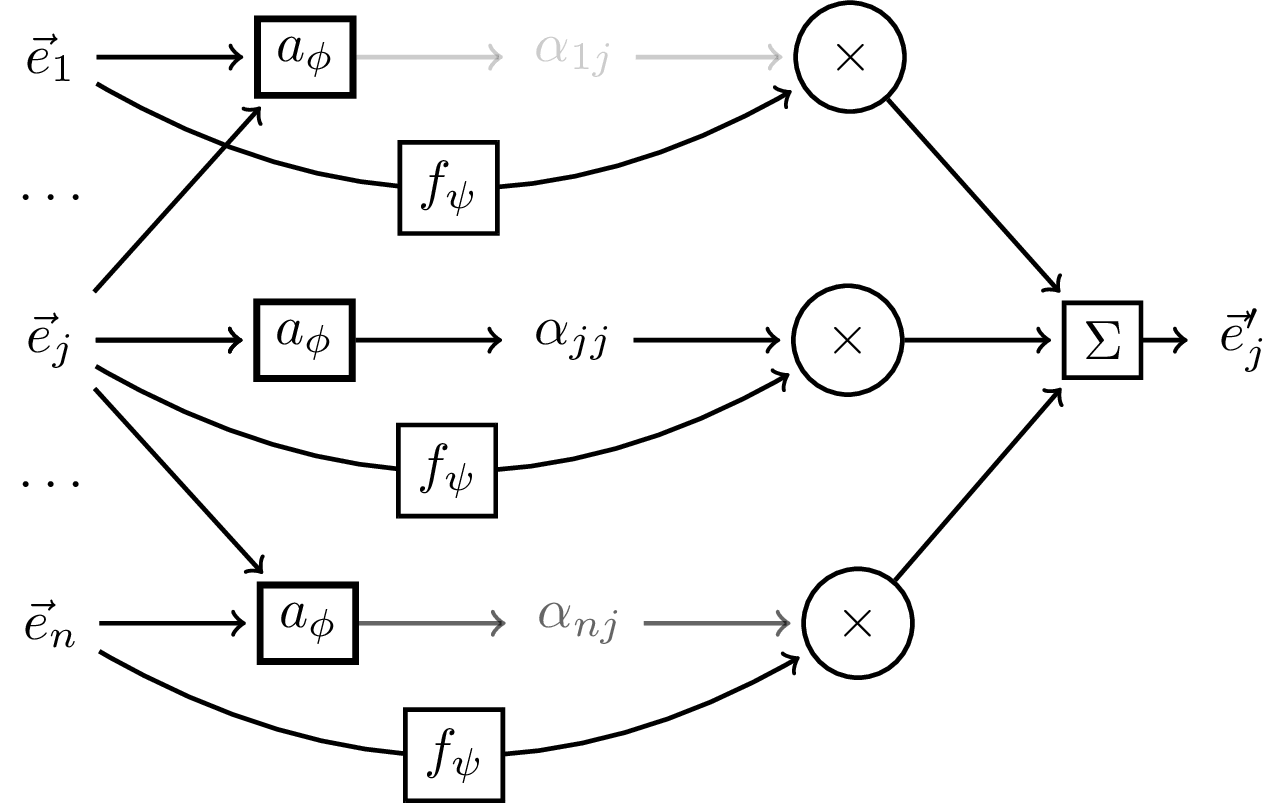

Self Attention

Creator: Petar Veličković (original)

Illustrates the attention mechanism from "Attention Is All You Need" (arXiv:1706.03762).

Code

self-attention.typ (112 lines)

self-attention.tex (52 lines)
