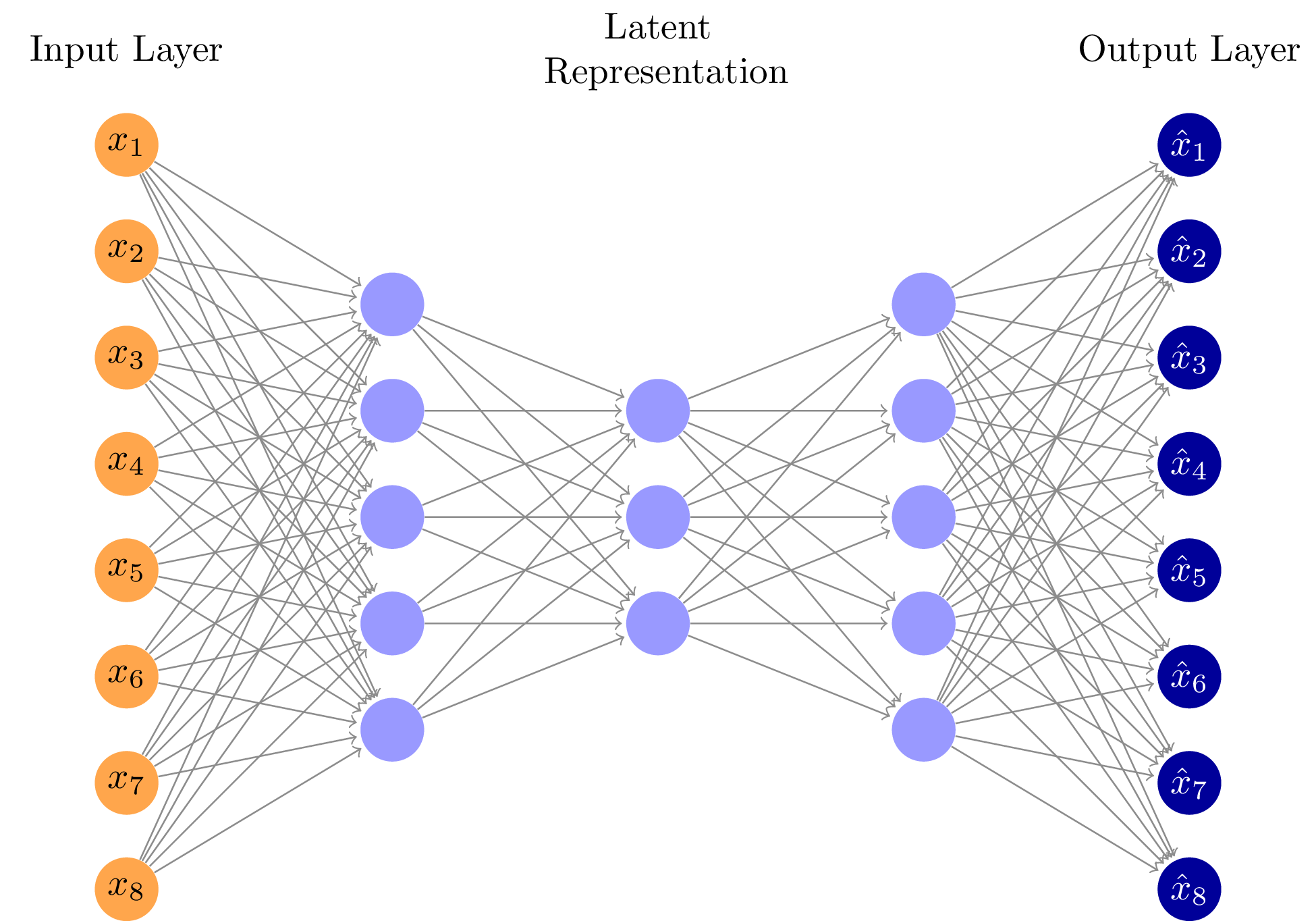

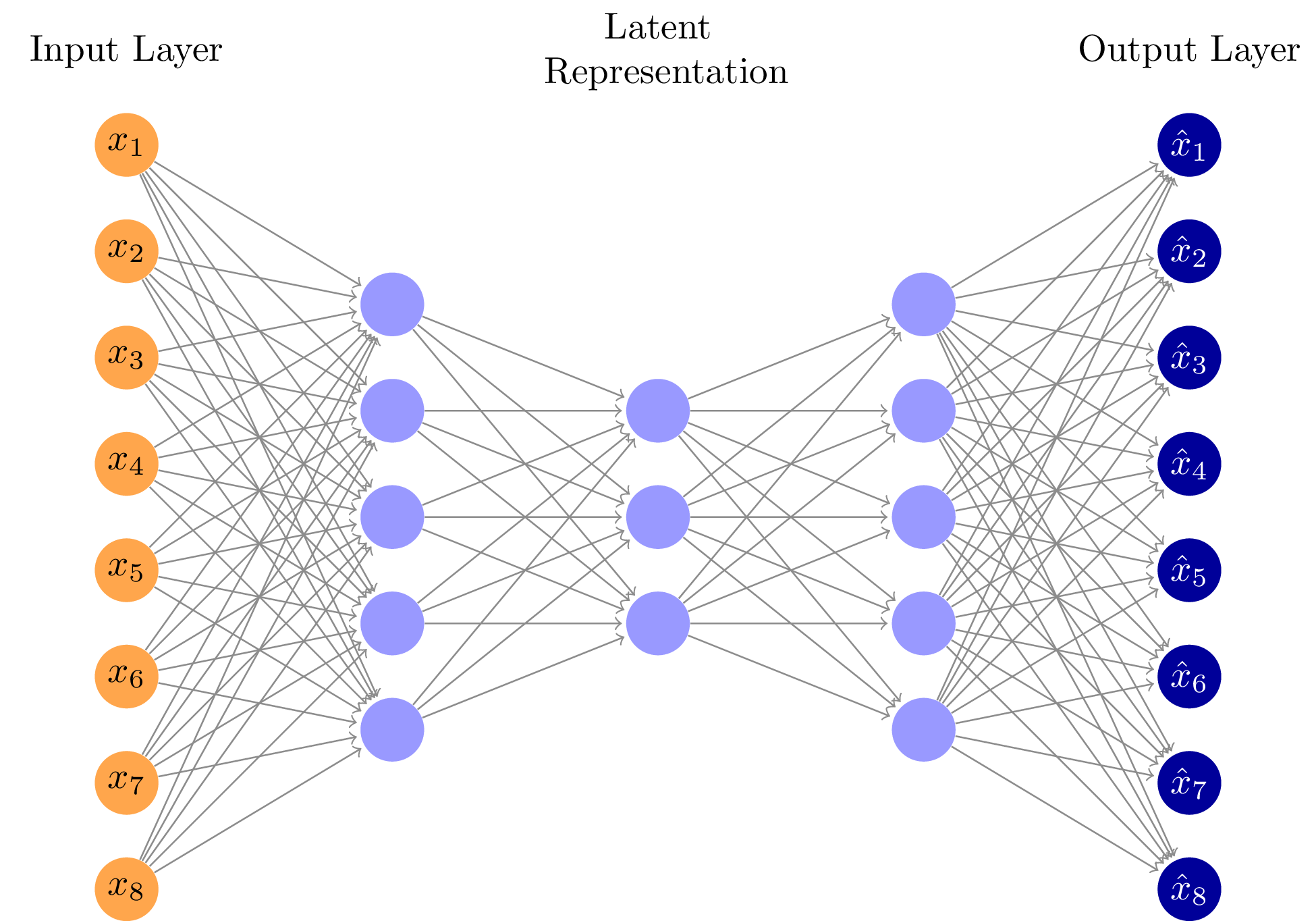

Autoencoder

Variational autoencoder architecture. Made with https://github.com/battlesnake/neural.

Code

autoencoder.typ (61 lines)

autoencoder.tex (29 lines)
